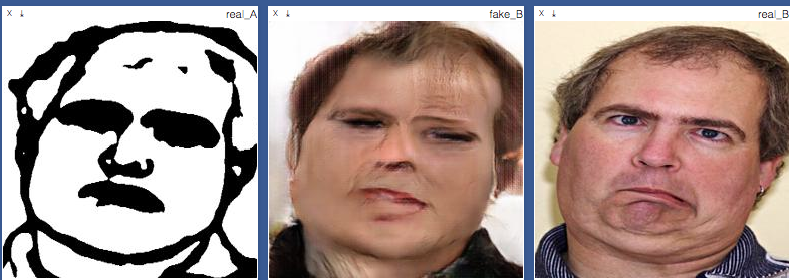

Confronted is a project in collaboration with Zaria Howard and Tatyana Mustakos. What would happen if you were meant to face the scary AI-laced creations that you make? We wanted to recontextualize the sketch2face research, to reimagine the relationship between the sketcher and the sketched humans. This manifests as a sketch2face implementation in VR.

Zaria created the dataset that this was all based on. She started from the Helen1 dataset, in which faces had already been detected and vectorized.

She then ran holistic edge detection on the faces, with the line-drawing face overlaid to emphasize the facial features. Some of the faces still did not show up in the result, so we also overlaid the line-drawing face on top of the edge pictures.
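As a rough illustration of that overlay step, here is a minimal sketch in numpy. It assumes both images are grayscale arrays of the same size with dark strokes on a white background; the function name `overlay_lines` and that convention are assumptions for this example, not necessarily what our preprocessing did.

```python
import numpy as np


def overlay_lines(edge_map, line_drawing):
    """Combine two grayscale images so a dark stroke in either one survives.

    Assumes white (255) background and dark strokes, so the per-pixel
    minimum keeps every stroke from both inputs.
    """
    if edge_map.shape != line_drawing.shape:
        raise ValueError("images must have the same shape")
    return np.minimum(edge_map, line_drawing)


# Tiny stand-in images: one horizontal edge and one vertical drawn line.
edges = np.full((4, 4), 255, dtype=np.uint8)
edges[1, :] = 0
lines = np.full((4, 4), 255, dtype=np.uint8)
lines[:, 2] = 0

combined = overlay_lines(edges, lines)
```

If the edge detector outputs bright edges on a black background instead, the same idea works with `np.maximum`.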

I then set up and ran pix2pix in PyTorch on that dataset for 200 epochs.
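For context on what a pix2pix dataset can look like: several implementations, including the widely used pytorch-CycleGAN-and-pix2pix repository, train on aligned pairs stored as a single side-by-side image. The sketch below shows that pairing with numpy. Whether this exact layout matches our setup is an assumption, and `make_pair` is a hypothetical helper name.

```python
import numpy as np


def make_pair(sketch, photo):
    """Place the input sketch and the target photo side by side (A | B)."""
    if sketch.shape != photo.shape:
        raise ValueError("sketch and photo must have the same shape")
    # axis=1 is the width axis for (height, width, channels) images.
    return np.concatenate([sketch, photo], axis=1)


# Stand-in data: a black "sketch" and a white "photo", 4x4 RGB each.
sketch = np.zeros((4, 4, 3), dtype=np.uint8)
photo = np.full((4, 4, 3), 255, dtype=np.uint8)

pair = make_pair(sketch, photo)
```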

Tatyana set up a drawing program in Unity that I hooked up to the Oculus. This allowed the user to draw a face, or whatever they wanted, in VR and then hit a button to save a picture of their sketch. The sketch was formatted with numpy and sent over ZMQ to a computer with the trained pix2pix model, which processed the image and sent the result back to the computer running the Unity project.
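The exchange described above can be sketched roughly as follows. This is not our actual server or client code: it assumes a REQ/REP socket pair from pyzmq, a two-frame message (JSON header plus raw pixel bytes), and a Python client standing in for the Unity side. `fake_model`, the endpoint, and all function names are hypothetical.

```python
import json
import threading

import numpy as np


def pack_image(img):
    """Turn an array into two frames: a JSON header and the raw pixel bytes."""
    img = np.ascontiguousarray(img)
    header = {"dtype": str(img.dtype), "shape": list(img.shape)}
    return [json.dumps(header).encode("utf-8"), img.tobytes()]


def unpack_image(frames):
    """Rebuild the array from the two frames produced by pack_image."""
    header = json.loads(frames[0].decode("utf-8"))
    flat = np.frombuffer(frames[1], dtype=header["dtype"])
    return flat.reshape(header["shape"])


def fake_model(sketch):
    """Hypothetical stand-in for the trained pix2pix generator (inverts pixels)."""
    return 255 - sketch


def serve_once(endpoint):
    """Model machine: receive one sketch, reply with one generated image."""
    import zmq  # pyzmq; imported here so the helpers above work without it

    sock = zmq.Context.instance().socket(zmq.REP)
    sock.bind(endpoint)
    sketch = unpack_image(sock.recv_multipart())
    sock.send_multipart(pack_image(fake_model(sketch)))
    sock.close()


def request_face(endpoint, sketch):
    """Drawing machine: send a sketch and wait for the generated image."""
    import zmq

    sock = zmq.Context.instance().socket(zmq.REQ)
    sock.connect(endpoint)
    sock.send_multipart(pack_image(sketch))
    result = unpack_image(sock.recv_multipart())
    sock.close()
    return result


def demo_roundtrip(endpoint="tcp://127.0.0.1:5555"):
    """Run both ends on one machine; the port is an arbitrary choice."""
    server = threading.Thread(target=serve_once, args=(endpoint,))
    server.start()
    face = request_face(endpoint, np.zeros((256, 256, 3), dtype=np.uint8))
    server.join()
    return face
```

Calling `demo_roundtrip()` runs both ends locally. Sending the dtype and shape in a header frame means the receiver does not have to guess the image size when rebuilding the array from raw bytes.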

I posted the server code here and the client code here

By no means is this code pretty, but it gets the job done.

There is a good reason to consider and nurture sketch to human relationships.

Thanks to the Art and ML class taught by Dr. Eunsu Kang, Dr. Barnabas Poczos, and TA Jonathan Dinu for providing the prompt, to Aman Tiwari for helping with the PyTorch model, and to Golan Levin for additional advising.