The goal of this project is to transform Isabel into a statue as she performs her song Devices, which is about the feeling of being trapped as someone who suffers from PTSD.

The footage comes from a Depthkit shoot I did with Isabel. I have the depth data aligned with RGB data.

The dataset:

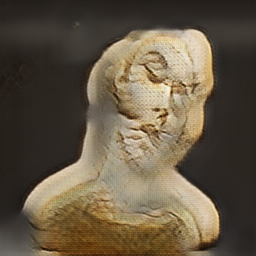

I first tracked down a scraped dataset of busts from the Metropolitan Museum that I knew my professor had (because I scraped it for his class when I was a sophomore). I then filtered it by material, keeping marble, plaster, and stone and discarding the rest. This got me about 400 good pictures to train on. I then ran a face detection algorithm, extracted the faces, and added those to the dataset as well. I thought it looked cool, so I made this video.
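The material filter described above can be sketched roughly like this. The record layout, the `medium` field name, and the file names are all assumptions for illustration; the actual scraped metadata format isn't shown here.

```python
# Materials to keep; everything else is dropped.
KEEP_MATERIALS = ("marble", "plaster", "stone")


def is_statue_material(medium: str) -> bool:
    """Return True if the medium description mentions a material we keep."""
    text = medium.lower()
    return any(material in text for material in KEEP_MATERIALS)


def filter_records(records):
    """Keep only records whose (hypothetical) 'medium' field matches."""
    return [r for r in records if is_statue_material(r.get("medium", ""))]


# Hypothetical sample records, not real entries from the dataset.
sample = [
    {"file": "bust_001.jpg", "medium": "Marble"},
    {"file": "bust_002.jpg", "medium": "Bronze"},
    {"file": "bust_003.jpg", "medium": "Painted plaster"},
    {"file": "bust_004.jpg", "medium": "Terracotta"},
]

kept = filter_records(sample)
print([r["file"] for r in kept])
```

A substring match is deliberately loose: it also lets through descriptions like "limestone", which seemed like the right call for a statue look, but a stricter match might suit other datasets better.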

The model:

I trained the CycleGAN using the PyTorch implementation by the original researchers.

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

I trained it for 200 epochs.

epoch 3

epoch 18

epoch 62

epoch 124

epoch 150

For this one I forgot to set the load size to a larger value, so the picture was randomly cropped, which is why it jumps around. Still, this may be my favorite epoch:
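Here is a minimal sketch of why random cropping makes a video jump: whenever the crop window is smaller than the image it is cut from, each frame gets its own random offset. The function name and the sizes 286 and 256 are assumptions for illustration (they match the repo's default `--load_size` and `--crop_size`, not necessarily what I ran), and this is not the repo's actual preprocessing code.

```python
import random


def crop_offset(image_size: int, crop_size: int, rng: random.Random):
    """Pick the top-left corner of a random crop window inside a square image."""
    slack = max(0, image_size - crop_size)  # how far the window can slide
    return rng.randint(0, slack), rng.randint(0, slack)


rng = random.Random(0)

# Crop smaller than the image: the window lands somewhere new on every frame.
jumpy = [crop_offset(286, 256, rng) for _ in range(5)]

# Crop the same size as the image: the offset is always (0, 0), so no jitter.
steady = [crop_offset(256, 256, rng) for _ in range(5)]

print("offsets with slack:   ", jumpy)
print("offsets without slack:", steady)
```

For video frames, the takeaway is that the image size and crop size need to match (or cropping needs to be switched off) so every frame is framed the same way.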

I wasn’t super happy with the results, so I decided to give it a second go, this time experimenting more with the model’s parameters. I set the weight of the cycle loss for Isabel -> statues -> Isabel to 100, thinking this would make the weight of the statues -> Isabel -> statues cycle loss negligible by comparison and thus produce better fake statues from Isabel’s data, but I was wrong.
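To get a feel for what that weighting does, here is a rough sketch of how the two cycle weights change each term's share of the generator objective. In the repo these weights are the `--lambda_A` and `--lambda_B` options (both default to 10.0). The raw loss values below are made up for illustration, the identity loss is left out, and treating Isabel as domain A is an assumption.

```python
def weighted_shares(adv, cycle_a, cycle_b, lambda_a, lambda_b):
    """Return each term's fraction of the total after applying cycle weights."""
    terms = {
        "adversarial": adv,
        "cycle A->B->A": lambda_a * cycle_a,
        "cycle B->A->B": lambda_b * cycle_b,
    }
    total = sum(terms.values())
    return {name: value / total for name, value in terms.items()}


# Made-up raw loss values, NOT measurements from my training runs.
raw = dict(adv=1.0, cycle_a=0.1, cycle_b=0.1)

default = weighted_shares(**raw, lambda_a=10.0, lambda_b=10.0)
skewed = weighted_shares(**raw, lambda_a=100.0, lambda_b=10.0)

for label, shares in (("default", default), ("skewed", skewed)):
    print(label, {name: round(share, 3) for name, share in shares.items()})
```

With these toy numbers, bumping one cycle weight to 100 shrinks the other cycle term as intended, but it shrinks the adversarial term by the same amount. That may be part of why the statues didn't improve: the generator is rewarded mostly for being able to reconstruct Isabel, not for fooling the statue discriminator.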

Finally, on a third try, I got some high-res results that I liked.

Those last two images are what made it into the final video. They were epochs 146/200 and 160/200 respectively.

You can see the final video here: